Section 4 Acoustic space use

In this study, acoustic space use reflects the amount and pattern of vocalizations within each frequency bin for a given time period.

Previous papers calculated acoustic space use by ascertaining the proportion of recordings in a particular time and frequency bin that is above a certain amplitude. However, this proportion was estimated by counting the number of frequency peaks within a given recording (see seewave::fpeaks()) and then scaling it to go from 0 to 1. In our analysis, we want to obtain an understanding of the overall acoustic activity for a given time and frequency bin. In other words, we want the area under the curve for a particular frequency contour.

Note From Sound Analysis and Synthesis with R by Jerome Sueur

“The basic premise in calculating ASU is that we compute a Short-time discrete fourier transform for a given frequency bin size (obtained by dividing the sampling frequency/window length over which the fourier transform is computed, for example 48000Hz/256 = 187.5 is the bin size). However, the size of the frequency bin is inversely proportional to time (Uncertainty principle; Pg.312) which would mean a bin size of 187.5 corresponds to a time duration of 0.005s (1/187.5=0.005s). Ultimately what matters is a compromise between frequency resolution and temporal resolution (Fig 11.3; Pg. 313).”

4.1 Install necessary libraries

library(seewave)

library(warbleR)

library(tuneR)

library(stringi)

library(tidyverse)

library(ggplot2)

library(RColorBrewer)

library(foreach)

library(doSNOW)

library(rlist)

library(tictoc)

library(patchwork)

# Source any custom/other internal functions necessary for analysis

source("code/01_internal-functions.R")Below, we have summarized approaches taken by previous studies:

Aide et al. 2017 aggregated recordings at time scale of hour of day and used a frequency bin size of 86.13 Hz and an amplitude filtering threshold of 0.02. So if the sampling rate is 22000 Hz, that would mean - 22000/86.13 ~ 256 frequency bins to divide up the frequency space. In this paper, there would be 24hr*256 bins = 6144 time/frequency bins.

Campos-Cerqueira et al. 2019 aggregated recordings at the time scale of hour of day (24 h), used a frequency bin size of 172 Hz, and an amplitude filtering threshold of 0.003. So if the sampling rate is 22000 Hz, that would mean - 22000/172 ~ 128 frequency bins. This resulted in a three‐dimensional (x = hour, y = acoustic frequency, z = proportion of all recordings in each time/frequency bin with a frequency peak value > 0.003 amplitude) matrix of acoustic activity with a total of 3,072 time/frequency bins (24 h × 128 frequency bins).

Campos-Cerqueira and Aide 2017 used the meanspec (f = 44,100, wl = 256, wn = “hanning”) and fpeaks (threshold = 0.1, freq = 172) function from the seewave package in R (Sueur et al., 2008a). The value of each peak was normalized using the maximum amplitude value within all recordings in the soundscape (i.e., site), and thus values ranged from 0 to 1. The number of frequency peaks was determined by counting the number of recordings with a peak within each of the 128 frequency bins that were equal or greater than the amplitude threshold. To control for the different number of recordings in each site and each time interval (i.e., hour), we divided the number of recordings with a peak in each time/frequency class by the total number of recordings collected during each hourly interval.

4.2 Load recordings across multiple days and sites

First, we examined all unique sites and dates for which we had a continuous 24 hours of recordings.

# List the path that contains all folders, which contain the audiomoth data

path <- "D:\\2020-summer\\" # change the path depending on where the raw data is

# Listing the folders within which .WAV files are stored

folders <- dir(path, recursive=F,full.names=T)

###### Please note ######

# The below set of lines need to be run only once for a given set of sites and days.

# Let's first rename the files by name of each site (as prefix)

for(i in 1:length(folders)){

setwd(folders[i])

# List the files within each folder and renaming the files with the prefix - SITE_ID

a <- list.files(paste0(path,basename(folders)[i],"\\"), full.names = T)

file.rename(from = a, to=paste0(basename(folders)[i],"_",basename(a)))

}

###### Ending note here ######

# Now get only those files for a full 24 hours across every unique site

files <- list()

for(i in 1:length(folders)){

a <- list.files(paste0(path,basename(folders)[i],"\\"), full.names = T)

site_date <- str_extract(basename(a),'\\w+_\\d+_')

# Choosing all 24 hours of data across every unique site (288 corresponds to 12 files every 1 hour)

for(j in 1:length(unique(site_date))){

dat <- a[str_detect(a,unique(site_date)[j])]

if((length(dat)<288)==TRUE){

next

} else {

files <- c(files, dat)

}

}

}

files <- unlist(files)4.3 Short-time discrete fourier transform (STDFT)

To compute an STDFT, we shall begin with an audio recording that has a sampling rate of s = 48,000 Hz and a window length (for the STDFT) wl = 256 samples, which results in a frequency bin size of z = 187.5 Hz (where z = s/wl). Given the sampling rate s, our Nyquist frequency f = 24,000 Hz (where f = s/2), and therefore, the total number of frequency bins across which an STDFT was run is n = 128 frequency bins (n = f/z). The final output of an STDFT corresponds to a matrix of N * m fourier coefficients (N = length of the audio recording and m = number of frequency bins). In this study, we computed STDFT across 24 hours of audio recordings, which translates to a matrix of 3072 (24 hours * 128 bins) fourier coefficients (Campos-Cerqueira et al. 2019). This matrix of coefficients essentially corresponds to ASU (or a measure of space ’used, for every hour of a day across the 128 bins in this study). Prior to computing STDFTs and ASU values, we set a threshold amplitude of 0.003 dB for audio recordings (following Campos-Cerqueira et al. 2019 and examination of our data).

To calculate ASU, we selected five consecutive days of acoustic data (24 continuous hours of data) across each site. Five days was the minimum number of days of consecutive sampling without disruptions across sites (eg. memory card switching/battery replacements/failure of equipment etc.).

# Select all unique site_date combinations for each unique site

site_date <- str_extract(basename(files),'\\w+_\\d+_')

unique(site_date)

# The only step that involves sub-selection (Selecting 5 days across each site; Note: IPTO06R has 3 days sampled and OLV110R has only 2 days sampled)

site_date <- unique(site_date)[c(1:5,10:14,19:23,28:47,52:61,71:75,

86:90,97:101,107:111,118:122,130:134,

141:143,150:154,161:165,173:177,188:189,

193:197,202:206,211:215,219:223,225:229,

231:235,237:241,249:253,261:265,269:273,

281:285,289:293,300:304,309:313,319:323,

329:333,340:344,351:355,361:365,370:374,

378:382,387:391,396:400)]

# Create a sequence of numbers to combine files by 1 hour

hour_seq <- seq(from=0,to=288, by=12)

# To name files with a suffix for each hour

time_of_day <- c("00:00-01:00","01:00-02:00","02:00-03:00","03:00-04:00",

"04:00-05:00","05:00-06:00","06:00-07:00","07:00-08:00",

"08:00-09:00","09:00-10:00","10:00-11:00","11:00-12:00",

"12:00-13:00","13:00-14:00","14:00-15:00","15:00-16:00",

"16:00-17:00","17:00-18:00","18:00-19:00","19:00-20:00",

"20:00-21:00","21:00-22:00","22:00-23:00","23:00-24:00")

# Loading parameters necessary for the Short-term fourier transform to be performed on hourly aggregates of data for each site_date combination

f <- 48000

wl <- 256 # This window length should be changed as a function of the frequency resolution (ie. bin size) and temporal resolution (ie. time)

ovlp <- 50

wn <- "hanning"

# Store the 24 hour acoustic space use data in a list and name it by a unique site and date

site_date_asu <- list()

# Add a progress bar for the loop

pb <- txtProgressBar(

min = 0,

max = length(unique(site_date)),

style = 3

)

# Select only 24 hours of data (00:00:00 to 23:55:00) for every unique site-date

for(i in 1:length(unique(site_date))){

tic("Total time for a single site-day combination")

# Store the acoustic space use data in a data.frame for plotting and analysis

space_use <- data.frame()

# Extract the strings first by site

dat <- files[stringr::str_detect(files,unique(site_date)[i])]

# Parallelize the runs

cl <- makeCluster(6, type = "SOCK")

registerDoSNOW(cl)

# Store the each hour of data for an entire day as a list here (raw audio files read by tuneR::readWave())

tic("Reading in .WAV files")

dailydata <- foreach(k=1:length(dat), .combine = 'c', .inorder = T, .packages = 'tuneR') %dopar% {

r <- readWave(dat[k])

}

toc()

gc()

stopCluster(cl)

# renaming the files to ensure that data is read in hour by hour and stored in a separate object called hourlydata

# Below we read the first 12 files in and then save it after computing the STDFT and then repeating it for the next 12 files and so on

for(t in 1:(length(hour_seq)-1)) { # Every 12 files correspond to one hour

tic("Computing Short-term fourier transforms for each hour")

if (t == 1) {

hourlydata <- dailydata[hour_seq[t]:hour_seq[t+1]]

} else {

hourlydata <- dailydata[(hour_seq[t]+1):hour_seq[t+1]]

}

gc()

# Parallelize the runs

cl <- makeCluster(6, type = "SOCK")

registerDoSNOW(cl)

# Store every hour's ASU data here

data_per_hour <- foreach(z = 1:length(hourlydata), .combine = 'rbind',

.inorder=T, .packages = 'seewave') %dopar% {

wave <- hourlydata[[z]]

n <- length(wave)

## Short-term Fourier transform (using a seewave internal function)

m <- sspectro(wave, f = f, wl = wl, ovlp = ovlp, wn = wn)

# Frequency selection and frequency axis

# Here, want only a sequence of numbers that correspond to the length of rows # of the short-time fourier transform and we divide it by 1000 to get values # in kHz

freq <- seq(0, (f/2) - (f/wl), length.out = nrow(m))/1000

# Calculate acoustic space use per frequency bin

f.cont <- apply(m, MARGIN = 1, FUN = sum)

# Store the space use data in a dataframe for plotting later

a <- data.frame(freq, f.cont)

}

rm(hourlydata); gc()

data_per_hour <- data_per_hour %>%

group_by(freq) %>%

summarise(f.cont=sum(f.cont))

data_per_hour$f.cont <- (data_per_hour$f.cont)/12

data_per_hour$time_of_day <- time_of_day[t]

space_use <- rbind(data_per_hour, space_use)

stopCluster(cl); gc()

toc()

}

space_use <- as.data.frame(space_use)

site_date_asu <- c(site_date_asu,list(space_use))

names(site_date_asu)[i] <- unique(site_date)[i]

rm(space_use, dailydata, data_per_hour); gc()

setTxtProgressBar(pb, i)

toc()

}

close(pb)

# store the list object for later

list.save(site_date_asu, "data/site_date_asu.rdata")

# Save the list of dataframes for future analysis

siteByDayAsu <- bind_rows(site_date_asu, .id = "Site_Day")

# Write to .csv

write.csv(siteByDayAsu, "data/site-by-day-asu.csv", row.names = F) 4.4 Average acoustic space use

Now that we have calculated acoustic space use values for a given frequency bin size and time resolution for every unique site-date combination, we would like to obtain a single set of space use values for every unique site. In other words, we would like to average space use values across all frequency bins for five days.

# All unique sites

site <- str_extract(basename(files),'^([[:alnum:]])+')

unique(site)

# Store the site-wise ASU values

site_asu <- list()

# Loop through site-wise data and average data for each site

for(i in 1:length(unique(site))) {

# Extract data needed for every unique site

dat <- site_date_asu[stringr::str_detect(names(site_date_asu),unique(site)[i])]

# Sum up values of acoustic space use across X no. of days for every unique site

asu_sum <- dat %>%

bind_rows(.id="data") %>%

group_by(freq, time_of_day) %>%

summarize(f.cont.sum=sum(f.cont))

# Averaging data as a function of number of days for a given site over which data was summed

asu_sum$f.cont.sum <- (asu_sum$f.cont.sum)/length(dat)

# Scaling the data between 0 to 1 for the sake of comparison across sites

asu_sum$f.cont.sum <- range01(asu_sum$f.cont.sum)

asu_sum <- as.data.frame(asu_sum)

site_asu <- c(site_asu, list(asu_sum))

names(site_asu)[i] <- unique(site)[i]

rm(dat,asu_sum)

}

# store the list object for later

list.save(site_asu, "data/site_asu.rdata")

# Save the list of dataframes for future analysis

siteWiseAsu <- bind_rows(site_asu, .id = "Site")

# Write to .csv

write.csv(siteWiseAsu, "data/site-wise-asu.csv", row.names = F)4.5 Visual examination of ASU

We will use ggplot2 to plot and save individual ASU plots for each unique site-date combination. This will then be repeated for each unique site (calculated in the previous chunk of code)

# Saving the ggplots to a folder for every unique site-date combination

for(i in 1:length(site_date_asu)){

# Get the data out from a list to a dataframe (else ggplot won't accept it)

dat <- data.frame(site_date_asu[i])

names(dat) <- c("freq","f.cont","time_of_day")

# Plot the data

g1 <- ggplot(dat, aes(x=time_of_day, y=freq)) +

geom_tile(aes(fill = f.cont)) +

scale_fill_gradientn(colours = brewer.pal(9,"Reds"))+

# scale_fill_scico(palette = "vikO") +

theme_bw() +

labs(x="Time of Day (in hours)",

y="Frequency (in kHz) ") +

theme(axis.title = element_text(size = 16, face = "bold"),

axis.ticks.length.x = unit(.5, "cm"),

axis.text = element_text(size = 14),

axis.text.x = element_text(angle=90, vjust=0.5, hjust=0.5),

legend.title = element_blank(),

legend.key.size = unit(1,"cm"),

legend.text = element_text(size = 12))

# Save the data to a folder

ggsave(filename="",width=12, height=7, units="in", dpi = 300,

plot=g1, device="png", path = paste("figs/siteByDayAsu/", paste(names(site_date_asu)[i], ".png", sep=""), sep=""))

}

# Saving the ggplots to a folder for every unique site

for(j in 1:length(site_asu)){

# Get the data out from a list to a .dataframe (else ggplot won't accept it)

dat <- data.frame(site_asu[j])

names(dat) <- c("freq","time_of_day","f.cont.sum")

# Plot the data

g1 <- ggplot(dat, aes(x=time_of_day, y=freq)) +

geom_tile(aes(fill = f.cont.sum)) +

scale_fill_gradientn(colours = brewer.pal(9,"Reds"))+

# scale_fill_scico(palette = "vikO") +

theme_bw() +

labs(x="Time of Day (in hours)",

y="Frequency (in kHz) ") +

theme(axis.title = element_text(size = 16, face = "bold"),

axis.ticks.length.x = unit(.5, "cm"),

axis.text = element_text(size = 14),

axis.text.x = element_text(angle=90, vjust=0.5, hjust=0.5),

legend.title = element_blank(),

legend.key.size = unit(1,"cm"),

legend.text = element_text(size = 12))

# Save the data to a folder

ggsave(filename="",width=12, height=7, units="in", dpi = 300,

plot=g1, device="png", path = paste("figs/siteWiseAsu/", paste(names(site_asu)[j], ".png", sep=""), sep=""))

}4.6 Representative figure for publication

# load .rdata to plot select figures for publication

site_asu <- load("data/site_asu.rdata")

site_asu <- bind_rows(site_asu, .id="Site")

# Selecting three representative sites (PANMP1B, INBS04R, INBS04U)

bm <- site_asu %>%

filter(Site=="PANMP1B")

ar <- site_asu %>%

filter(Site=="INBS04R")

nr <- site_asu %>%

filter(Site=="INBS04U")

# create separate figures for benchmark, active and passive sites and then patchwork them

# benchmark site

fig_bm <- ggplot(bm, aes(x=time_of_day, y=freq)) +

geom_tile(aes(fill = f.cont.sum)) +

scale_fill_gradientn(colours = brewer.pal(9,"Reds"))+

theme_bw() +

labs(fill = "Acoustic Space Use\n") +

theme(axis.title = element_blank(),

axis.ticks.length.x = unit(.5, "cm"),

axis.text = element_text(family="Century Gothic",size = 14),

axis.text.x = element_text(angle=90, vjust=0.5, hjust=0.5),

legend.title = element_text(family="Century Gothic",

size = 14, face = "bold"),

legend.key.size = unit(1,"cm"),

legend.text = element_text(family="Century Gothic",size = 14)) +

annotate(geom = "text", x = max(bm$time_of_day), y = 23, label = "Benchmark site", hjust = 1, family = "Century Gothic", size=5, fontface="bold")

# actively restored site

fig_ar <- ggplot(ar, aes(x=time_of_day, y=freq)) +

geom_tile(aes(fill = f.cont.sum)) +

scale_fill_gradientn(colours = brewer.pal(9,"Reds"))+

theme_bw() +

labs(x="\nTime of Day (in hours)",

fill = "Acoustic Space Use\n") +

theme(axis.title = element_text(family="Century Gothic",

size = 14, face = "bold"),

axis.ticks.length.x = unit(.5, "cm"),

axis.text = element_text(family="Century Gothic",size = 14),

axis.text.x = element_text(angle=90, vjust=0.5, hjust=0.5),

axis.title.y = element_blank(),

legend.position = "none") +

annotate(geom = "text", x = max(ar$time_of_day), y = 23, label = "Actively restored site", hjust = 1, family = "Century Gothic", size=5, fontface="bold")

# naturally regenerating site

fig_nr <- ggplot(nr, aes(x=time_of_day, y=freq)) +

geom_tile(aes(fill = f.cont.sum)) +

scale_fill_gradientn(colours = brewer.pal(9,"Reds"))+

theme_bw() +

labs(y="Frequency (in kHz)\n",

fill = "Acoustic Space Use\n") +

theme(axis.title = element_text(family="Century Gothic",

size = 14, face = "bold"),

axis.ticks.length.x = unit(.5, "cm"),

axis.text = element_text(family="Century Gothic",size = 14),

axis.title.x = element_blank(),

axis.text.x = element_text(angle=90, vjust=0.5, hjust=0.5),

legend.position = "none") +

annotate(geom = "text", x = max(nr$time_of_day), y = 23, label = "Naturally regenerating site", hjust = 1, family = "Century Gothic", size=5, fontface="bold")

# patchwork the above three for the Fig. 3

fig_asu <- fig_nr + fig_ar + fig_bm

ggsave(fig_asu, filename = "figs/fig3.png", width=52, height=17,device = png(), units="cm", dpi = 300); dev.off()

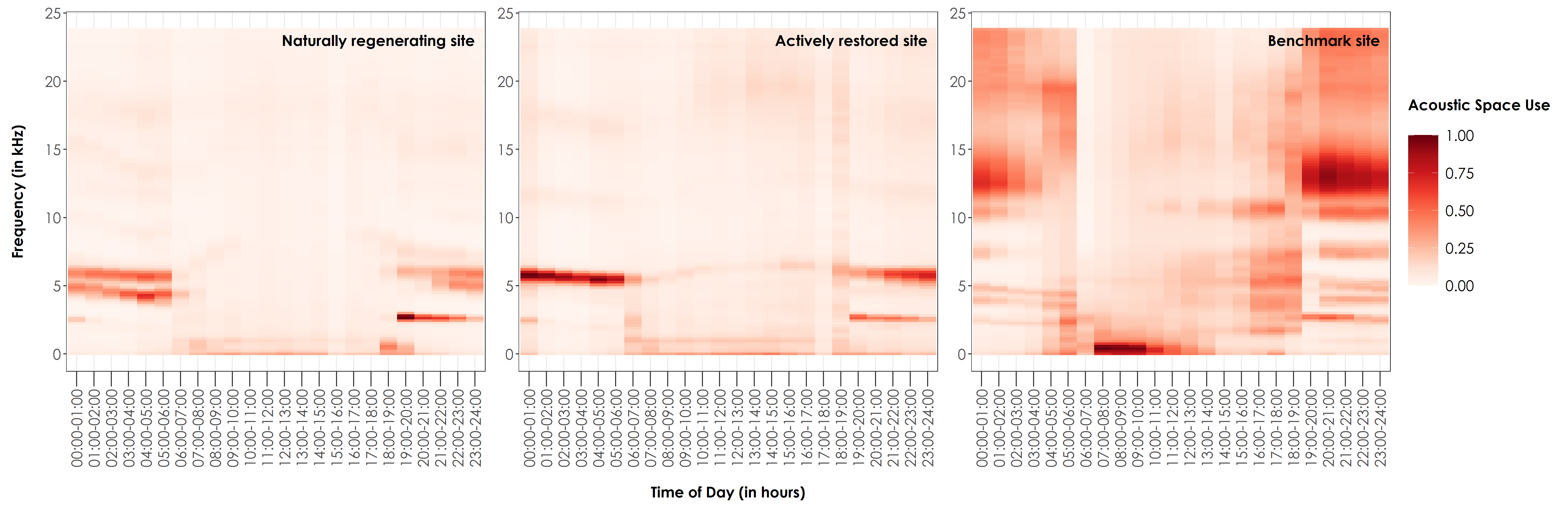

Visual examination of acoustic space use across a naturally regenerating, actively restored and benchmark site In this figure, we visually examined ASU for each of the 128 frequency bins and 24 hours across each site and treatment type. Shown here are representative figures for an NR, AR, and BM site. In this visualization, we estimated the proportion of frequency space (values between 0 to 1) that was occupied by vocalizations/sounds above 0.003 dB for every single hour of recording across 24 hours in a day. We observed largely empty frequency bins between 12 kHz to 24 kHz for the majority of AR and NR sites. For the sake of this representative figure, we show the average ASU calculated across five days for each site. However, the patterns described here are broadly consistent across days and sites (Supporting Information). In the above figure, BM = undisturbed benchmark rainforest sites, AR = Actively restored forest sites, and NR = Naturally regenerating forest sites.